Part A

Part 1: Shoot the Pictures

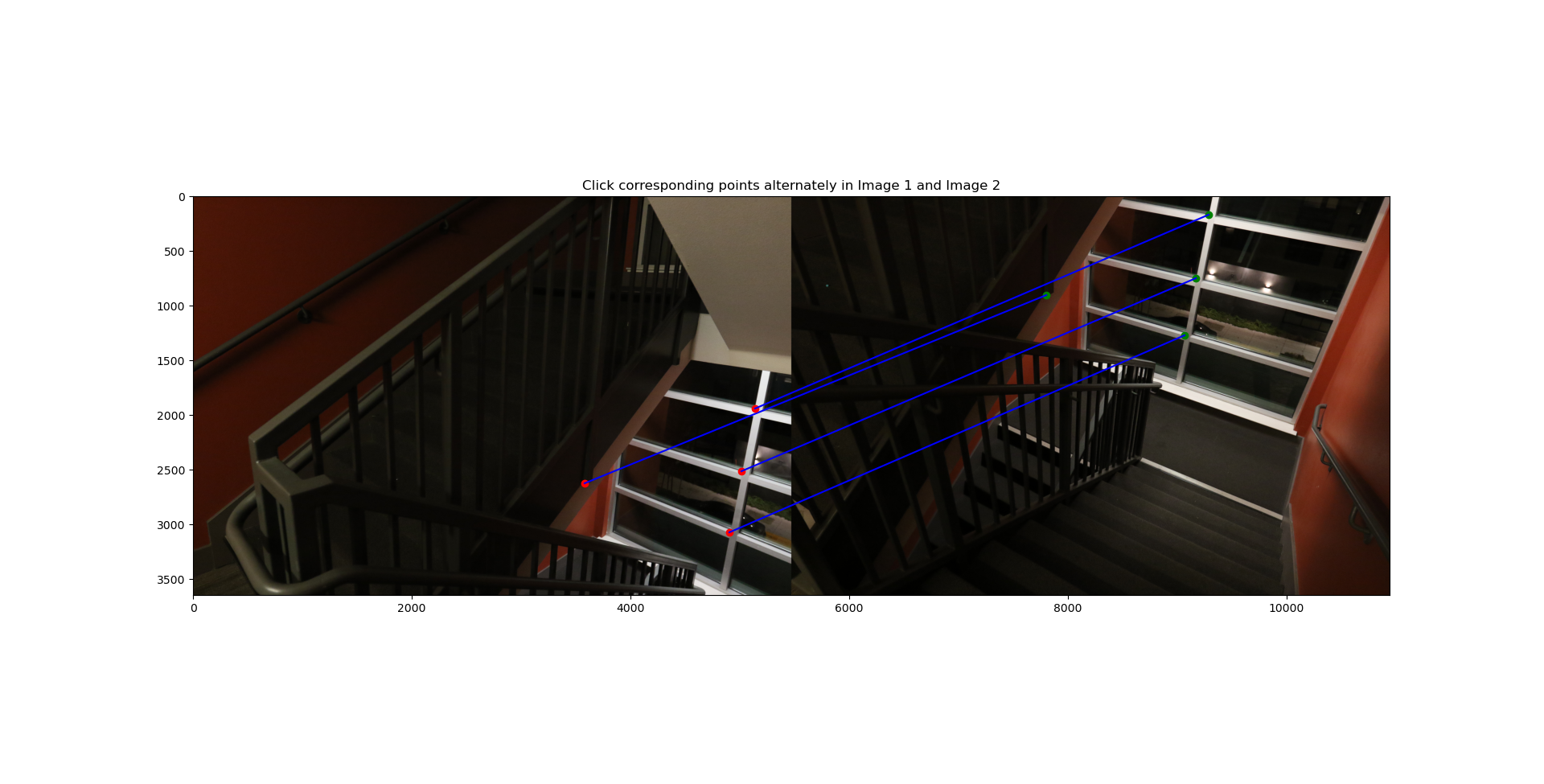

Part 2: Recovering Homographies

Part 3: Warp the Images

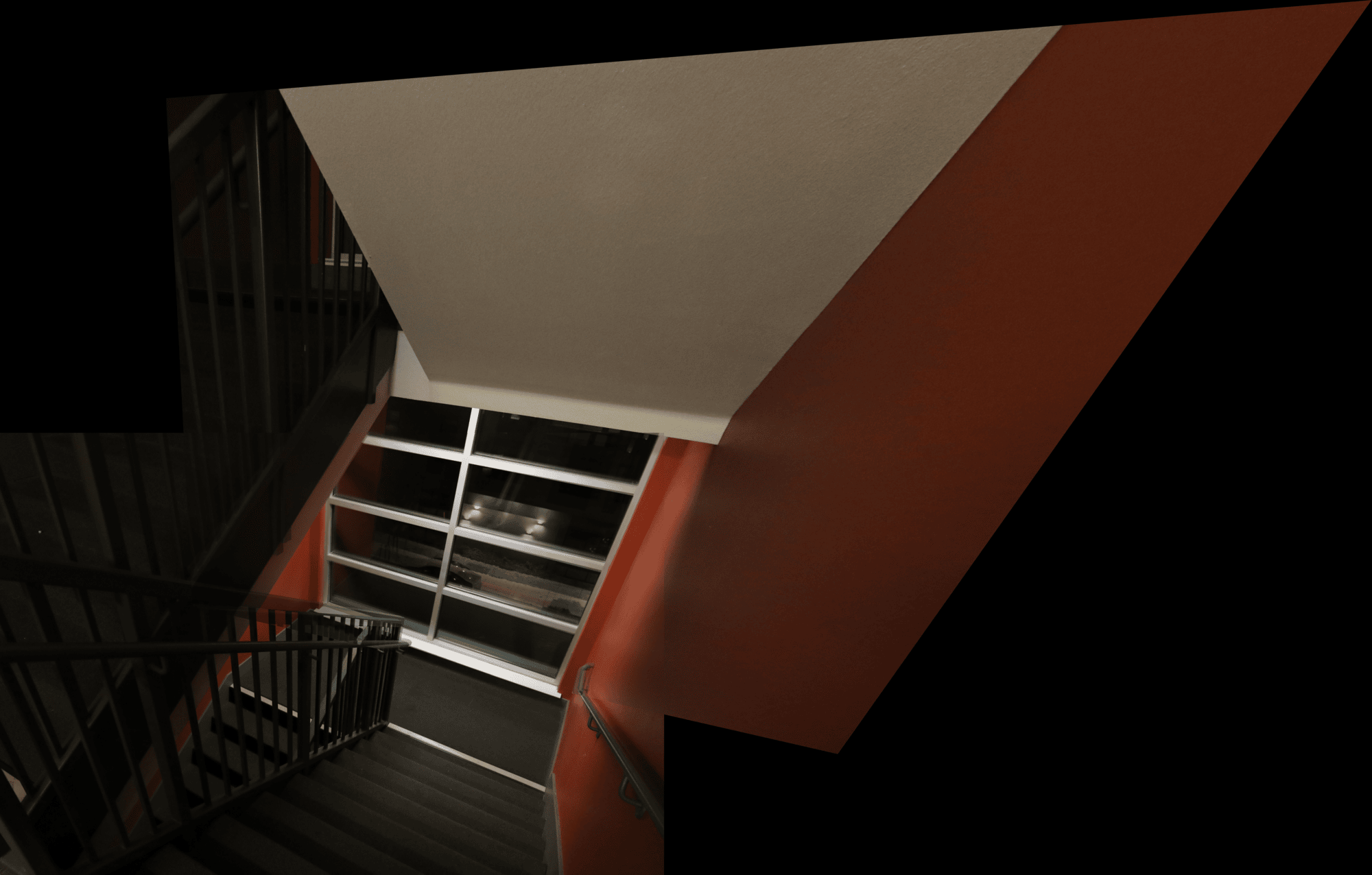

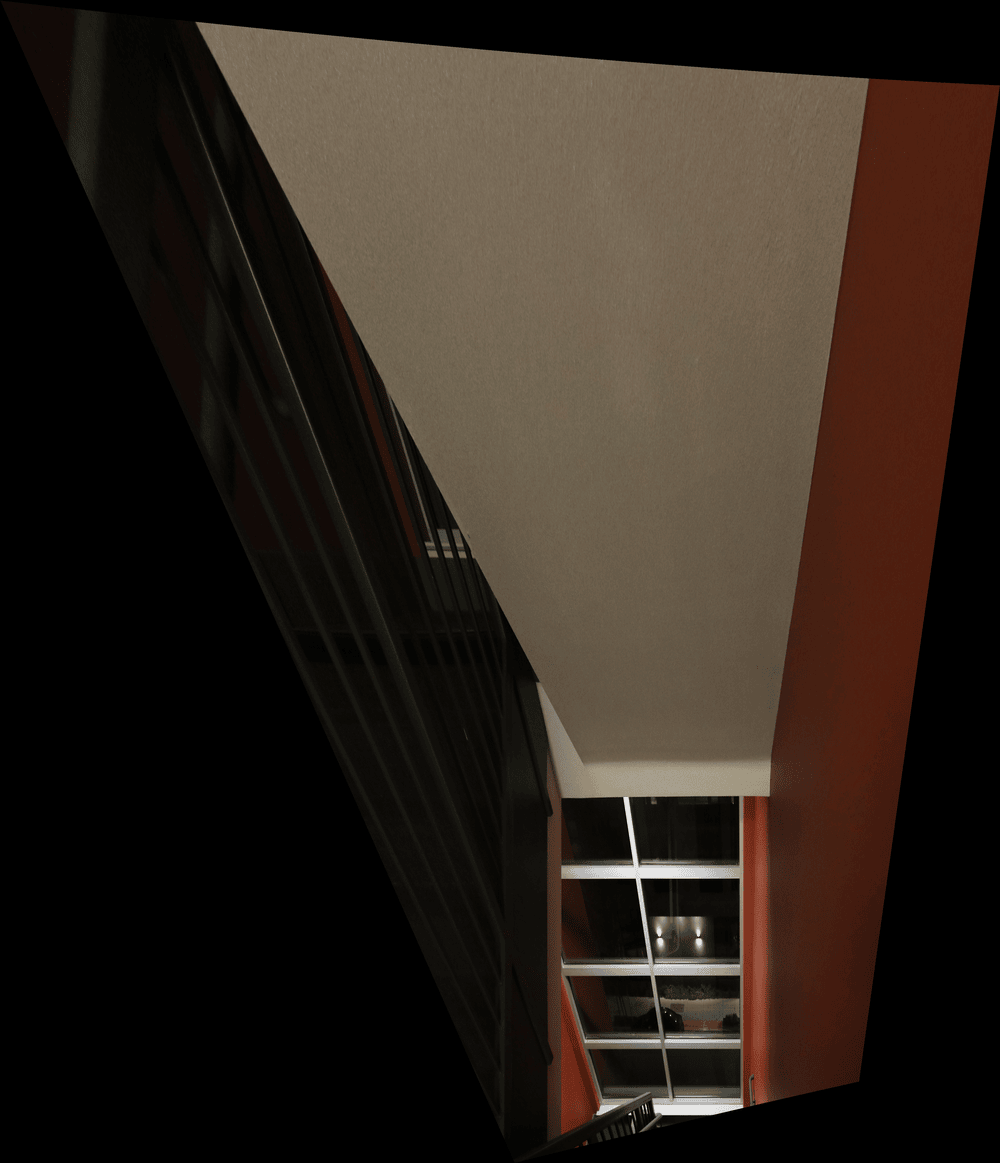

Part 4: Image Rectification

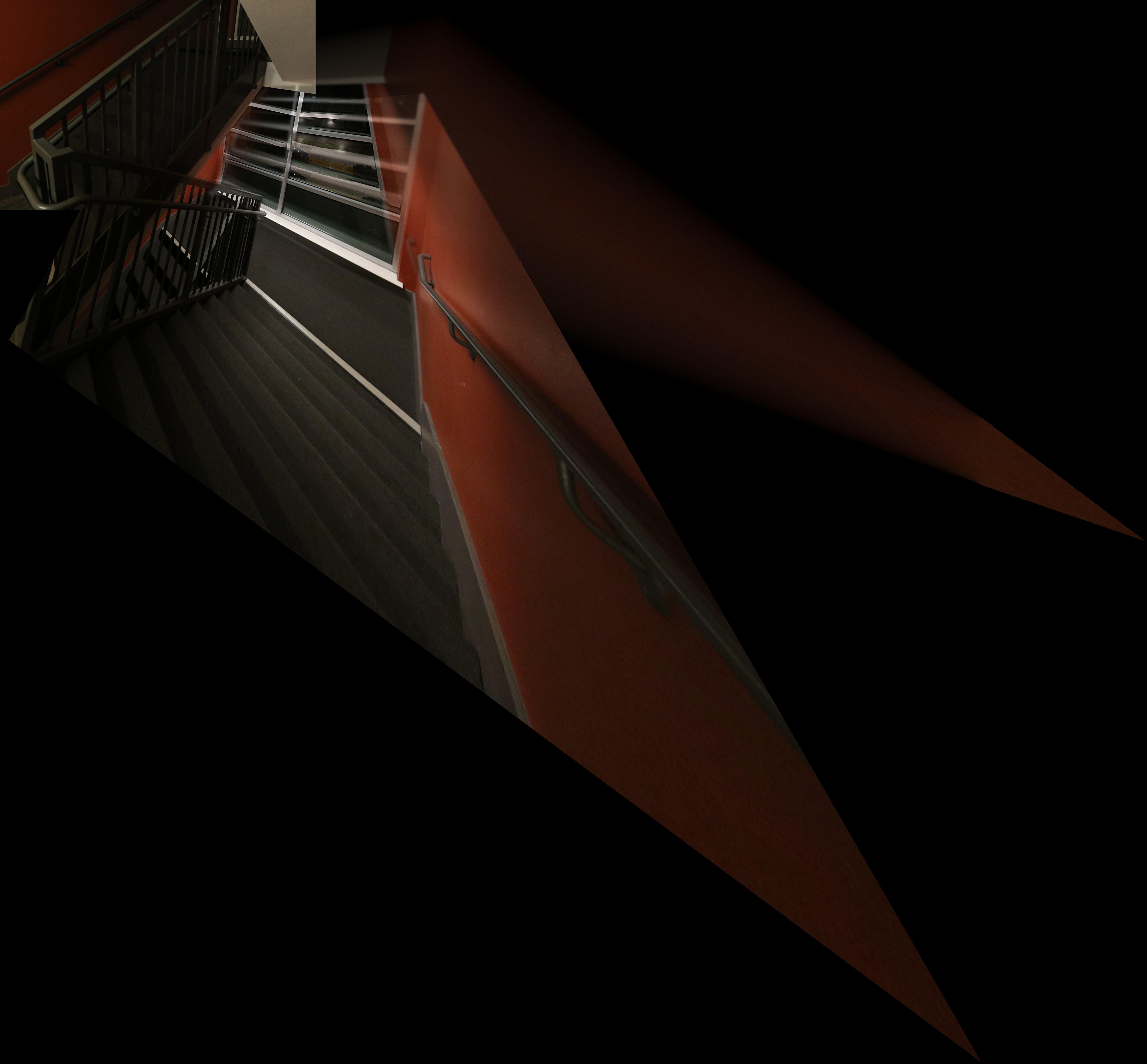

Part 5: Blend the images into a mosaic

Part B

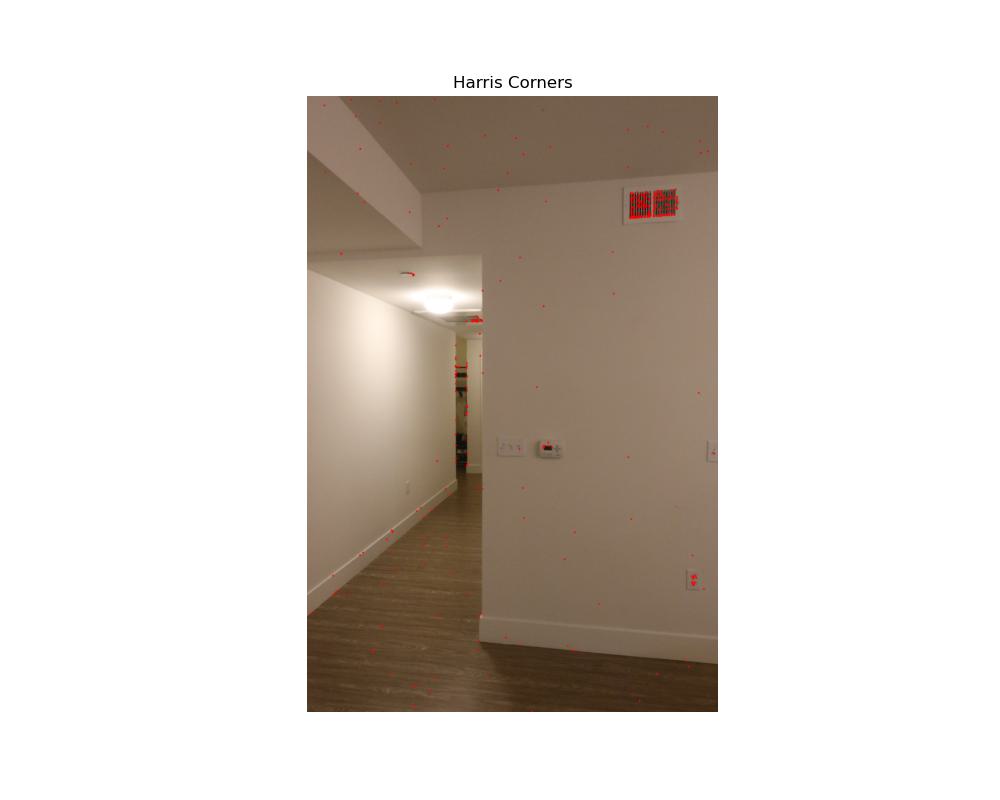

Part 6: Detecting Corner Features

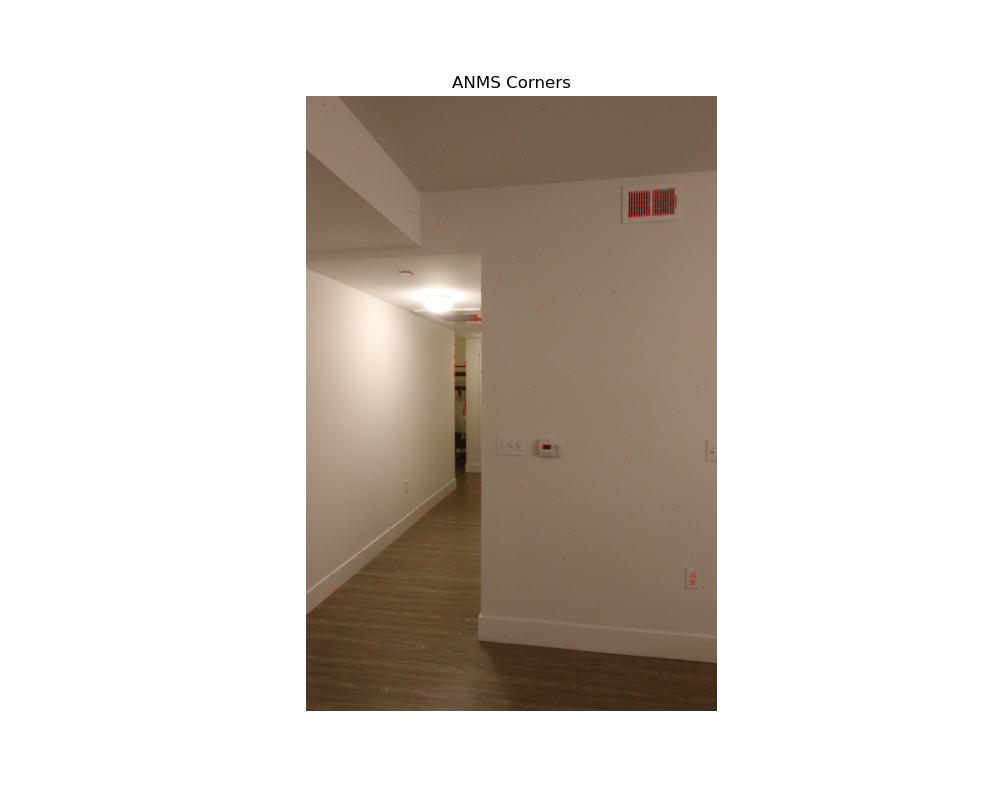

Part 7: ANMS

After detecting the corners, I implemented Adaptive Non-Maximal Suppression (ANMS) to select a subset of the corner features. ANMS keeps keypoints that are both strong and spatially well distributed. Each corner is assigned a suppression radius, which is the distance to the nearest corner that is significantly stronger than it, and only the corners with the largest radii are retained. As a result, weak corners lying close to stronger ones are discarded, and the selected corners are spread across the image instead of clustering in high-contrast regions. The figure below shows the chosen corners overlaid on the image.
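A minimal NumPy sketch of the suppression-radius computation is shown below. It is not the implementation used for this project. The function name `anms`, the robustness factor `c_robust = 0.9`, and the default `n_keep = 500` are illustrative assumptions. The sketch builds an N x N distance matrix, so it is only practical for a few thousand corners.

```python
import numpy as np

def anms(coords, strengths, n_keep=500, c_robust=0.9):
    """Hypothetical ANMS sketch.

    coords:    (N, 2) array of corner positions.
    strengths: (N,) array of corner responses (e.g. Harris values).
    Returns the indices of the n_keep corners with the largest suppression radii,
    ordered from largest radius to smallest.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances between all corners, shape (N, N).
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    # suppresses[i, j] is True when corner j is sufficiently stronger than corner i.
    suppresses = strengths[:, None] < c_robust * strengths[None, :]
    # Radius of corner i: distance to the nearest corner that suppresses it (inf if none).
    masked = np.where(suppresses, dist2, np.inf)
    radii = np.sqrt(masked.min(axis=1))
    # Sort by descending radius; the globally strongest corner has radius inf and comes first.
    order = np.argsort(-radii, kind="stable")
    return order[:n_keep]
```

For example, with corners at x = 0, 1, and 10 and strengths 10, 5, and 4, `anms(..., n_keep=2)` keeps corners 0 and 2. The weakest corner survives because it is far from anything stronger, while corner 1 sits right next to the strongest corner.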

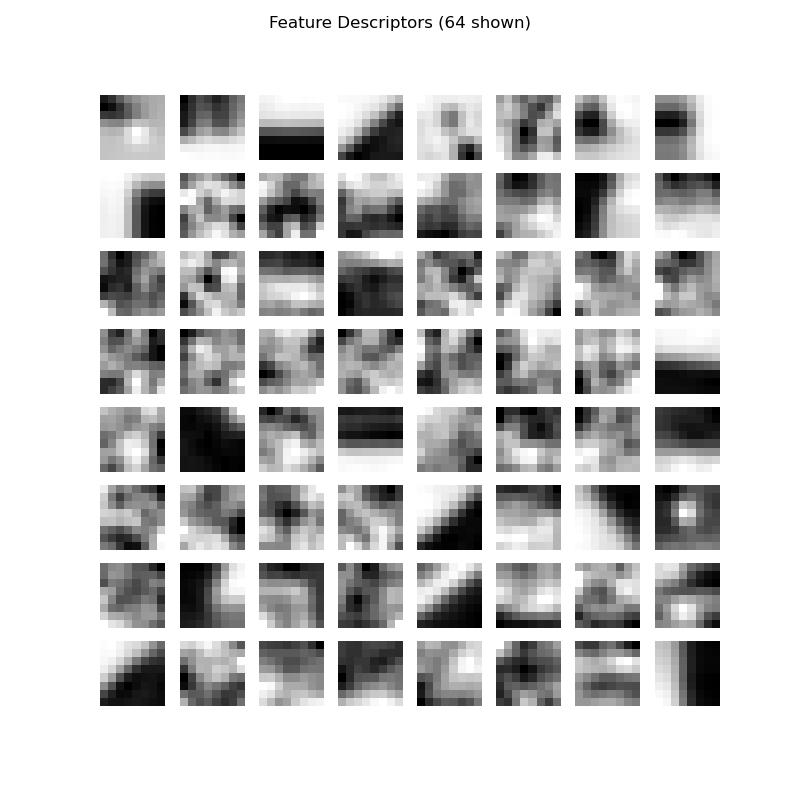

Part 8: Extracting a Feature Descriptor

Next, I extracted a feature descriptor for each keypoint. Following the approach from the paper, I sampled an 8x8 patch from a larger 40x40 window around each keypoint (a sampling step of 40 / 8 = 5 pixels) to capture more context. Each patch was then bias/gain normalized (shifted to zero mean and scaled to unit standard deviation). This makes the descriptor invariant to affine changes in intensity, such as uniform shifts in brightness and contrast. The result is a 64-dimensional feature vector describing the local image structure around each keypoint. The figure below visualizes the descriptors extracted from the image.
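The following sketch shows one way to produce such a descriptor from a grayscale image. The function name `extract_descriptor` is hypothetical. The downsampling method is also an assumption: the sketch averages each 5x5 block of the 40x40 window, which is a simple anti-aliased downsample, where an implementation might instead blur with a Gaussian and subsample. Keypoints whose window would leave the image return `None`.

```python
import numpy as np

def extract_descriptor(gray, x, y, window=40, patch=8, eps=1e-8):
    """Hypothetical sketch: bias/gain-normalized patch x patch descriptor, flattened.

    gray: 2D grayscale image; (x, y): keypoint column and row.
    Returns a vector of length patch * patch, or None if the window leaves the image.
    """
    assert window % patch == 0, "window size must be a multiple of the patch size"
    half = window // 2
    r, c = int(round(y)), int(round(x))
    if r - half < 0 or c - half < 0 or r + half > gray.shape[0] or c + half > gray.shape[1]:
        return None
    win = gray[r - half:r + half, c - half:c + half].astype(float)
    step = window // patch  # 5 for a 40x40 window and an 8x8 patch
    # Average each step x step block to get a patch x patch low-resolution sample.
    small = win.reshape(patch, step, patch, step).mean(axis=(1, 3))
    # Bias/gain normalization: zero mean, unit standard deviation.
    small = (small - small.mean()) / (small.std() + eps)
    return small.ravel()
```

Because of the normalization, applying `extract_descriptor` to `3 * gray + 7` gives (up to floating-point error) the same vector as applying it to `gray`. This is the intensity invariance described above.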

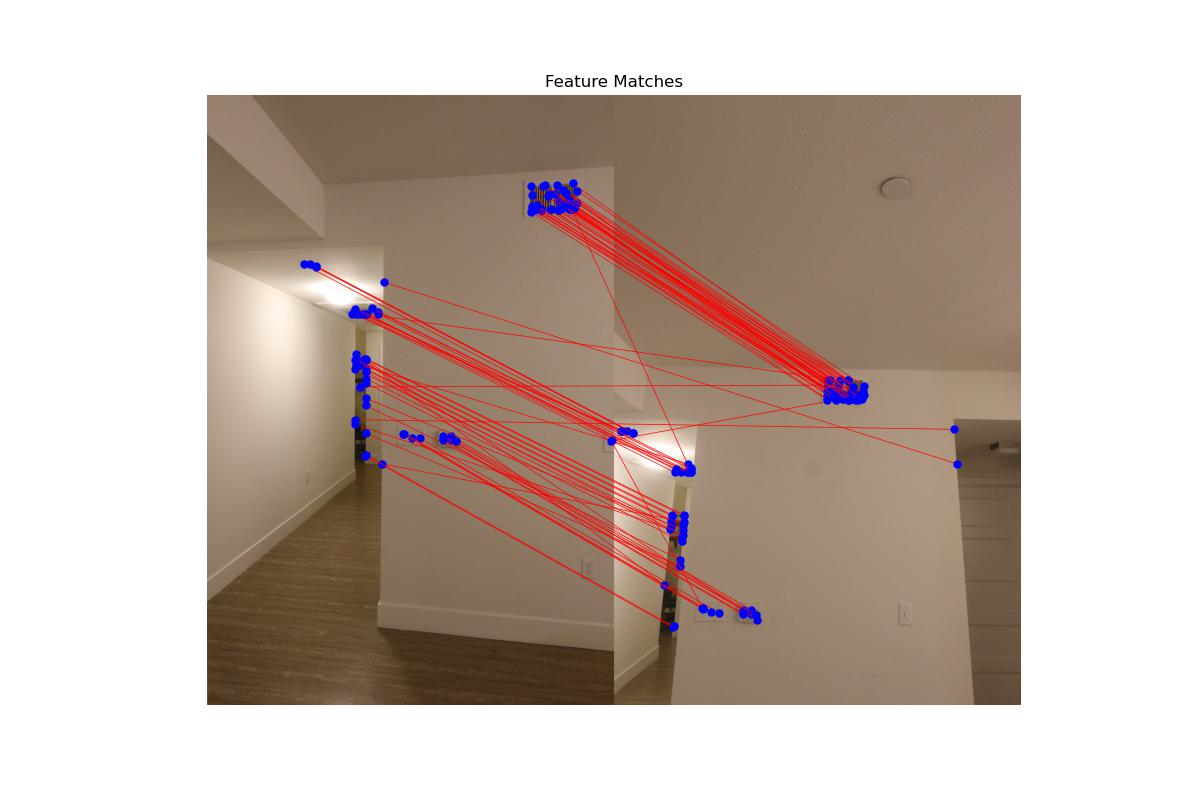

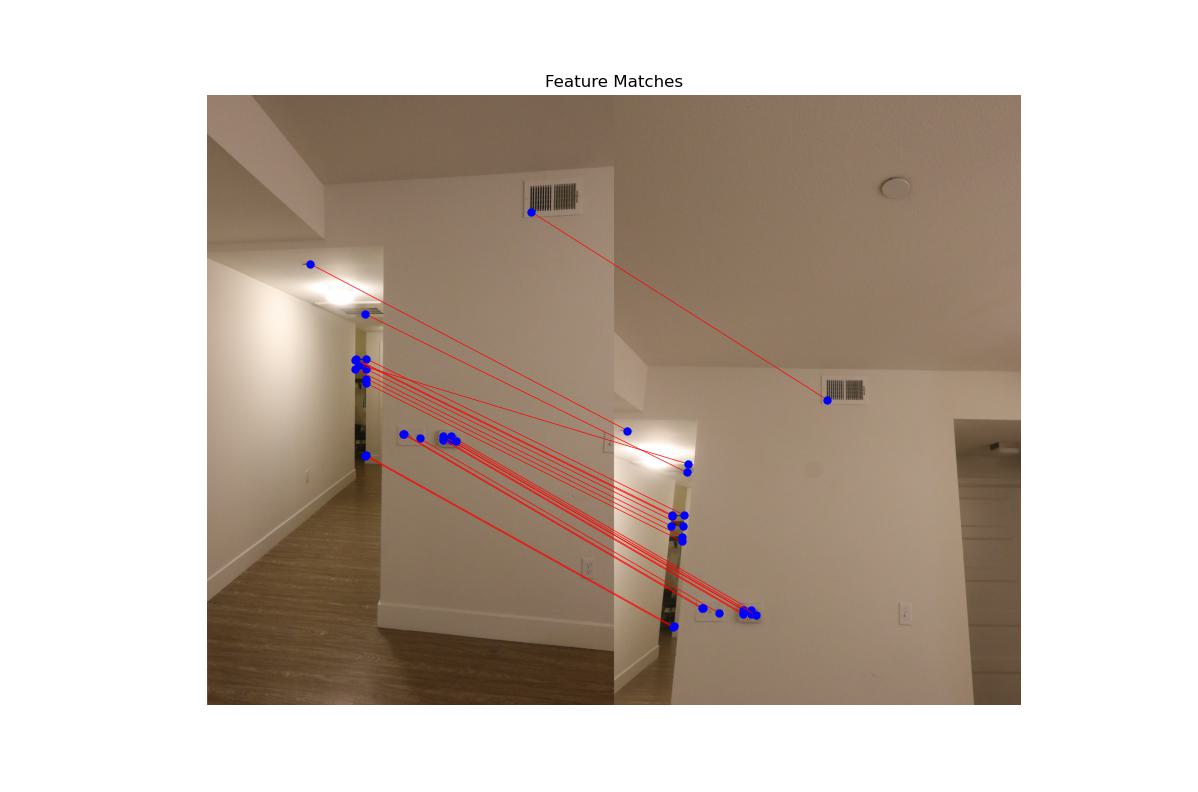

Part 9: Matching Descriptors

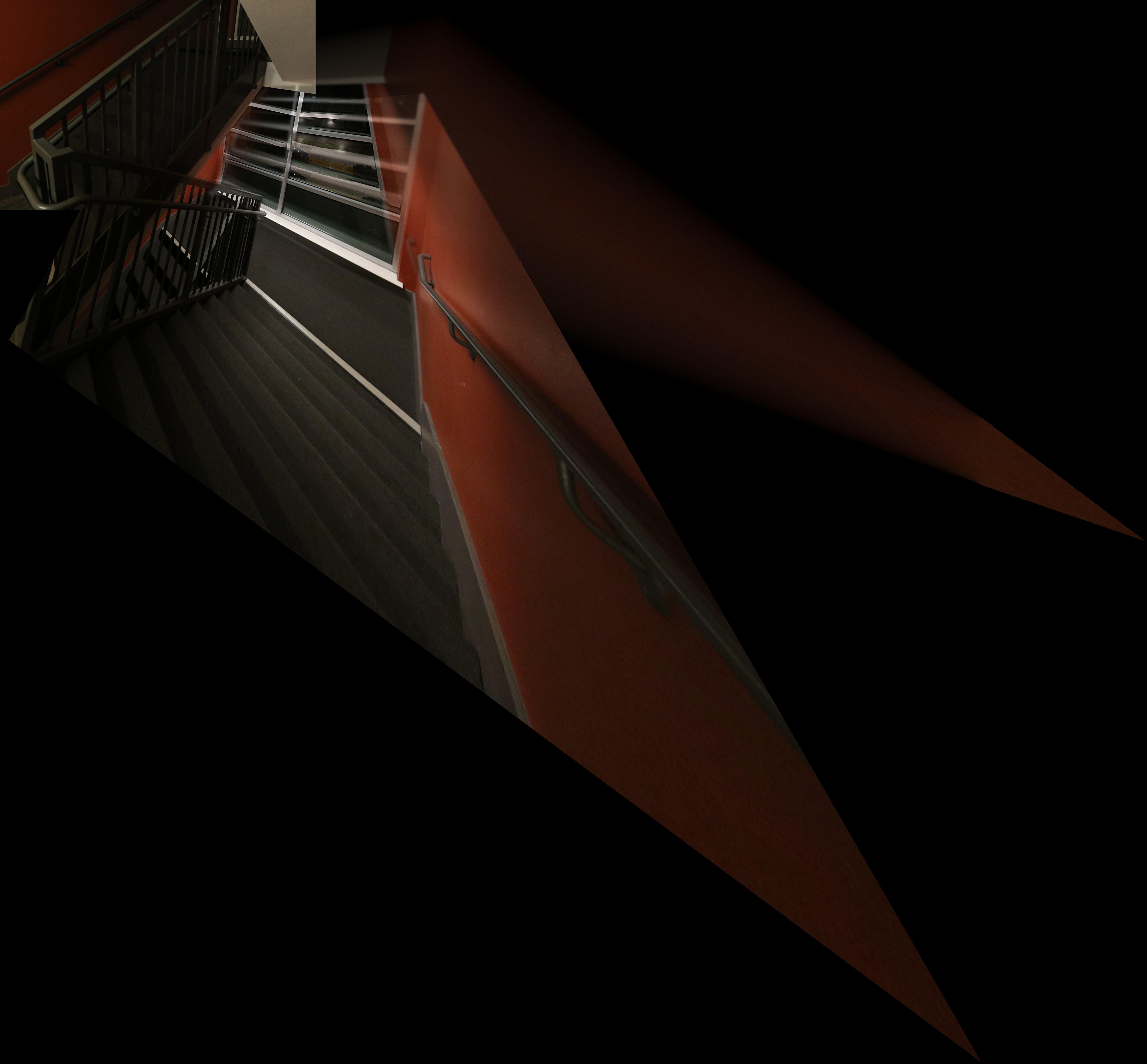

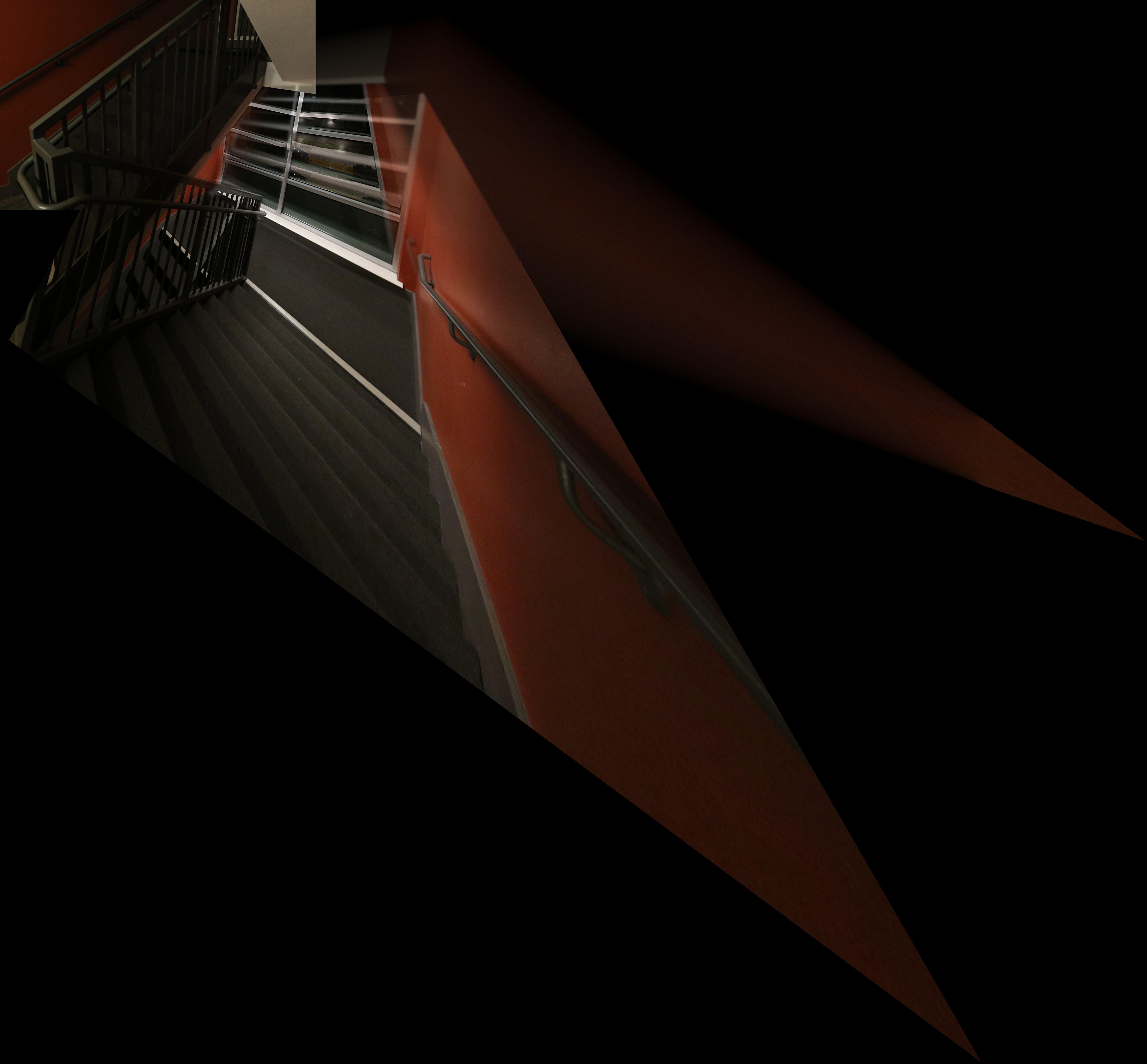

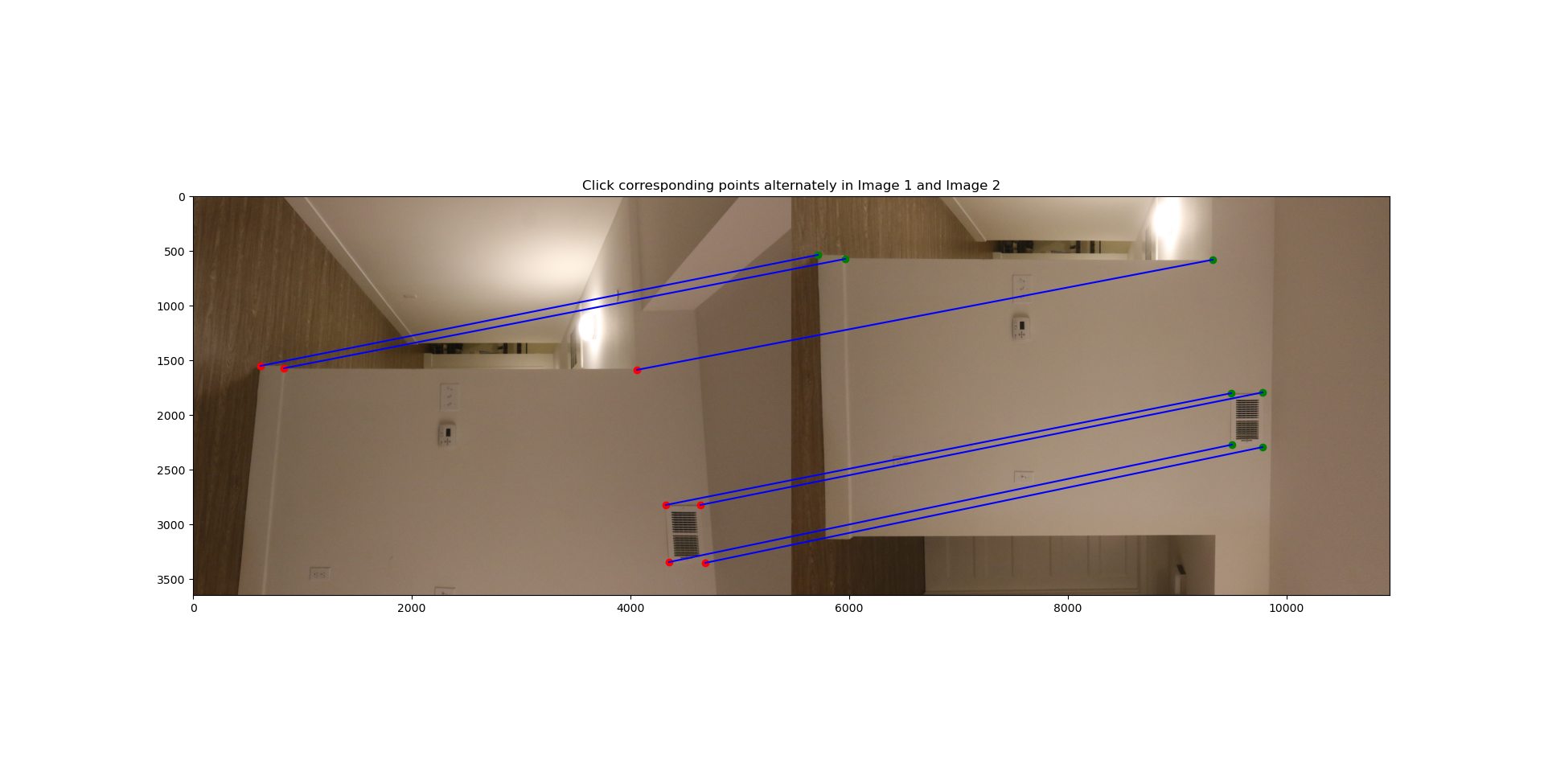

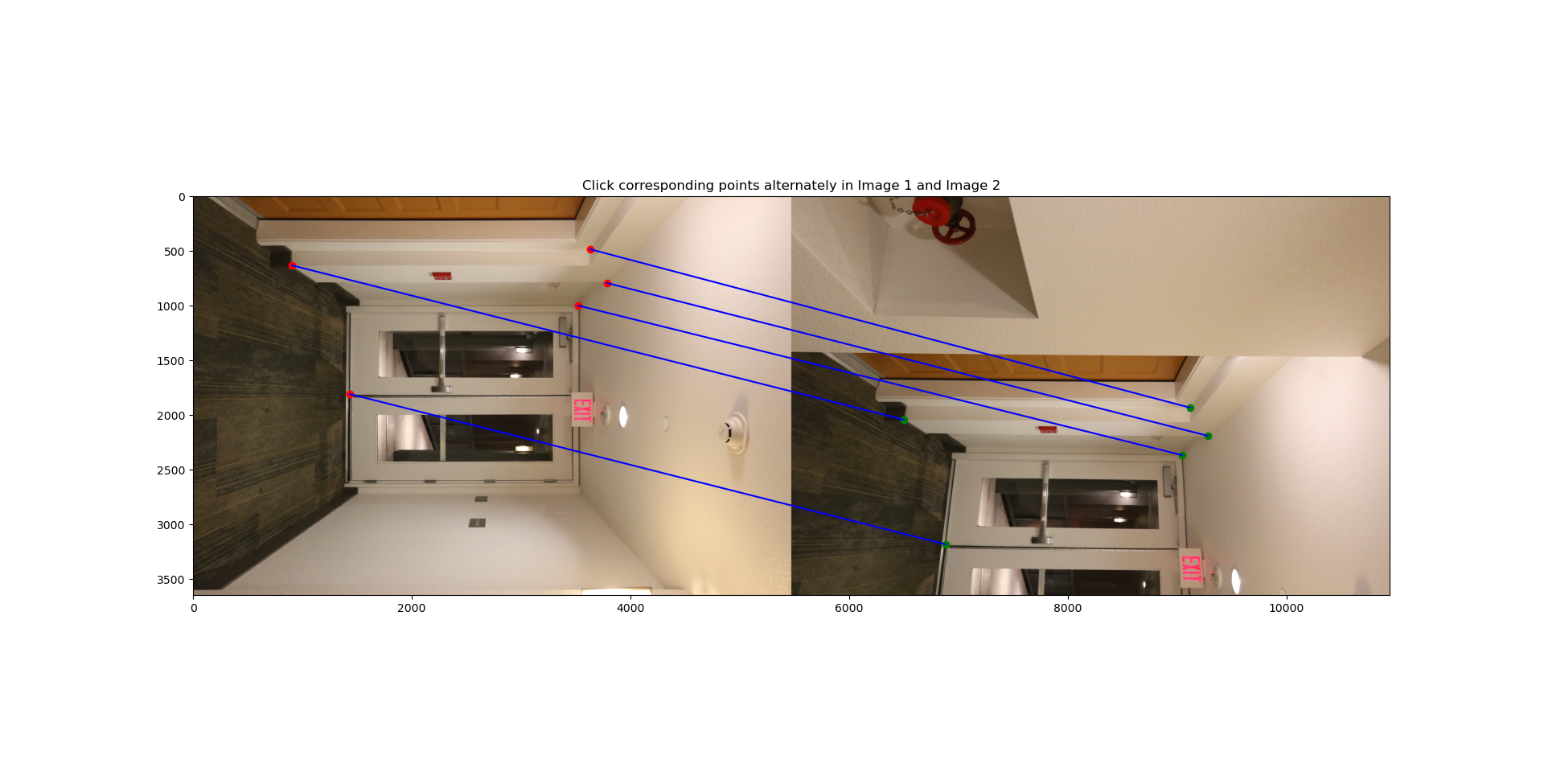

Part 10: Three Mosaics

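Finally, I used RANSAC to robustly compute a homography between the two images from the matched features. RANSAC repeatedly fits a homography to four randomly chosen matches and keeps the model that agrees with the largest set of matches (the inliers). As a result, incorrect matches do not corrupt the estimate. The homography aligns the images so they can be blended into a mosaic. I produced three mosaics: one stitched manually and two stitched automatically using the pipeline described above. The resulting mosaics are displayed below.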
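Here is a self-contained sketch of 4-point RANSAC for homography estimation. It is not the code used for these mosaics. The homography fit uses the direct linear transform (DLT) solved with an SVD. The function names, the iteration count (`n_iters = 1000`), and the inlier threshold (`thresh = 2.0` pixels) are assumptions chosen for illustration. After the loop, the sketch refits the homography to all inliers of the best model, which is a common but optional final step.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography mapping src -> dst (both (N, 2), N >= 4) via DLT + SVD."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.asarray(rows, dtype=float)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (N, 2) points through H using homogeneous coordinates."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return pts_h[:, :2] / pts_h[:, 2:3]

def ransac_homography(src, dst, n_iters=1000, thresh=2.0, seed=0):
    """Hypothetical 4-point RANSAC; returns (H, boolean inlier mask)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        # Fit an exact homography to 4 random matches.
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        # Count matches whose reprojection error is below the threshold.
        err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
        inliers = err < thresh
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    # Refit on all inliers of the best model.
    H = fit_homography(src[best_inliers], dst[best_inliers])
    return H, best_inliers
```

Degenerate samples, such as nearly collinear points, can produce an ill-conditioned `H` and NumPy warnings. The sketch simply lets those samples score few inliers rather than filtering them out up front.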